AI in Warfare: What You Need to Know in 2025

Let's get smarter about AI in warfare

Author’s note: Before we jump in, I just wanted to thank my paid subscribers who make this work possible. I wouldn’t be able to devote a full month to writing a single piece (yes, this mammoth AI in warfare primer took me 28 days) unless I had the financial backing of my awesome readers. I hope this document is helpful. I’ve tried to keep my signature snark to a minimum. I’ve asked my wife to proofread this article, so any grammar errors are entirely her fault (I’m kidding). I’ve tried to section this article in a way that makes sense, at least in my mind. I considered making this available only to paid subscribers, but after much thought, I think everyone needs to read it. Stay frosty.

The Age of Autonomous War

Picture this: It’s just past midnight on a bombed-out stretch of Ukrainian highway. Somewhere overhead, a quadcopter the size of a pizza box is silently circling, its battery pack built from DJI lithium cells and its “brain” a slab of Nvidia silicon that, until recently, was probably destined for a self-driving car or a Japanese vacuum.

On the ground, a Russian T-72, already missing most of its side skirts and, if you listen closely, its driver’s last nerve, tries to hide in the shadow of a roadside billboard advertising cheap dental implants.

In the old wars, that tank might’ve had a fighting chance, especially at night. In this war, a one-thousand-dollar drone is about to strike without any human input, and it won’t even need to ask permission.

The drone’s neural net runs an image-recognition sweep, drawing on patterns it learned from its training data: thousands of videos and images collected over years of fighting and refined through repeated retraining for adaptive targeting.

It identifies the Russian tank as hostile in six milliseconds.

A mere millisecond later, the AI sends a query to its “decision engine” for a “yes, kill” or “no, don’t kill” order.

The machine’s confidence is high. It issues the kill order and transmits the target’s geolocation via Link 16 so damage-assessment drones can find the tank when the sun comes up.

The drone then dives into a known structural weak point on the Russian tank, incinerating itself and setting off the tank’s ammunition.

Welcome to the age of autonomous war.

If you take nothing else from this piece, my friends, this is the big battlefield change that AI brings: The speed of the kill chain (find, fix, track, target, engage, assess) has been accelerated from days or weeks to seconds or milliseconds.

If you still think “AI in combat” means an army of Terminators marching out of the Skynet factory, I’m sorry to disappoint you. There are no chrome-plated skeletons on the Zaporizhzhia front.

The battlefield AI revolution is about cheap, disposable brains on a circuit board, scattered across every battlefield from Ukraine to Gaza to the Red Sea, quietly and ruthlessly accelerating the pace of destruction.

AI in warfare is here. Not “around the corner,” not “sometime in the 2030s,” not “pending further debate at the UN.”

The technology is live, it’s lethal, and it’s rewriting the rules of combat in real time, no matter how many press releases, think tank PDFs, or grimly optimistic defense expos say otherwise.

You don’t need to take my word for it: just ask the Russian logistics teams who now spend more time scanning the sky for loitering munitions than loading trucks, or the IDF patrols in Gaza who can’t move fifty meters without triggering an AI-driven sensor web that knows the alleyways better than the locals.

But let’s get the obvious out of the way: AI isn’t just some shiny appendage tacked onto yesterday’s missiles. It’s now becoming embedded in everything that moves, sees, listens, or strikes on a modern battlefield.

Drones fly their own missions, jamming gear listens and adapts in milliseconds, and targeting algorithms decide who or what gets hit and when. Some of this tech is Pentagon-certified, but most is built in garages, by hacker collectives, or by “war entrepreneurs” who, a year ago, were running Etsy stores and now head up the defense startup scene in Kyiv or Haifa.

Ukraine’s war is the most visible, but it’s hardly the only AI arms race. In Gaza, AI-powered loitering munitions and surveillance networks are used to track, target, and, occasionally, spare civilians, often in the same breath.

In the Red Sea, AI-enabled drone swarms from Yemen’s Houthis have turned one of the world’s busiest shipping lanes into a live-fire lab for every naval force with a press office and a checkbook. The US and China, caught somewhat off guard by AI’s rapid development, are learning, copying, and iterating as quickly as they can.

But the battlefield isn’t the only thing that’s changed. The whole tempo of war, the speed at which you go from “maybe that’s an enemy” to “they’re definitely dead,” has shifted so radically that the human mind is quickly becoming the slowest component in the loop.

In Ukraine, a drone pilot can go from launch to target in under five minutes; the onboard AI can process video, identify vehicles, and guide itself in real time, with little or no human oversight after liftoff.

You can argue over the ethics of this all you want, but good luck outpacing the machine.

Of course, every new war tech gets its moment in the hype sun. Remember when cyberwar was going to turn every future battlefield into a deleted scene from Mr. Robot? Or when every analyst thought that precision-guided munitions meant wars would be over in weeks, with zero collateral damage and immaculate body counts?

AI is the new darling, but it comes with a level of self-replicating, open-source chaos that makes the old hype cycles look quaint.

Don’t get me wrong: some of the breathless headlines are pure vapor. The world isn’t full of truly autonomous “hunter-killer” bots making life-and-death calls with no human oversight, yet.

For every viral clip of a drone autonomously dive-bombing a Russian tank, there are a hundred more where human pilots, spotters, or data annotators are still involved somewhere in the loop. But that’s cold comfort, because the loop is shrinking. The number of moments where a human gets to pause, check, or veto an AI decision is collapsing under the weight of operational necessity.

The war doesn’t wait, and neither do the drones.

If you’re a civilian, it’s easy to think AI in warfare is an overhyped sideshow: a tech arms race for bored procurement officers and giddy Silicon Valley VCs. You’re not wrong to be skeptical. For decades, defense contractors and marketing jackals have slapped “AI-powered” on everything from missile interceptors to logistics apps, hoping nobody would ask what it actually meant. But this time, the changes are tangible, and they reach far beyond the trenches.

First, speed: The AI revolution in war is about compressing the sensor-to-shooter timeline to near-instantaneous. In practical terms, this means fewer safe havens, less time to react, and a battlefield where hesitation is fatal.

The phrase “kill chain” used to describe a process with steps. Now it’s more like a single, blinding flash.

Second, proliferation: The democratization of AI tech means that battlefield autonomy is no longer just for the big spenders. The same kind of Nvidia Jetson board that rides on commercial drones and robots can be bolted to a $200 plywood kamikaze drone or a $1,000 homebuilt UGV. You don’t need a Pentagon budget, just a decent soldering iron and a GitHub repo.

Third, ambiguity: AI doesn’t care about uniforms, language, or national borders. Any group, state, proxy, insurgent, or teenager with a grudge can field semi-autonomous weapons with the right off-the-shelf parts.

For the first time, the United States and its vast military-industrial apparatus are learning how power, violence, and risk are distributed in an age where anyone can build a smart war machine.

Fourth, escalation: AI is accelerating the feedback loops that drive war itself. Drones get smarter in the field, algorithms update overnight, and yesterday’s defense is tomorrow’s vulnerability.

In the AI arms race, there’s no finish line, just a lot of frantic sprinting.

All this leads to a hard truth: most of what science fiction warned us about AI war turned out to be too slow, too cautious, and not nearly weird enough. Autonomous war is here, but it doesn’t look like a movie. It looks like millions of chips, sensors, and lines of code being patched, rewritten, and hacked in real time by people who have skin in the game.

Forget killer robots marching in formation. Think instead of a digital arms bazaar, where the latest algorithm for target recognition can be stolen, copied, or improved upon within days.

Think of 15-year-olds in suburban Warsaw or Tel Aviv helping to write the code that decides which vehicle on a screen lives or dies. Think of a future where “lethality at scale” is just a line in a firmware update.

Maybe you’re reading this from a cozy office in Michigan, London, or Singapore, thinking, “This is fascinating, but it’ll never touch my life.”

Here’s the catch: the same algorithms that spot tanks in drone feeds are coming for your city’s traffic cameras, your insurance company’s risk models, and your neighborhood’s policing strategies. The pace of wartime innovation always leaks into civilian life, and the ethics, or lack thereof, often follow close behind.

More urgently, the world’s power balance is shifting, and it’s shifting around who can wield, disrupt, and adapt to AI-driven warfare the fastest. It’s not just about who can build the biggest tank anymore. It’s about who can code, deploy, and iterate lethal autonomy at scale, in the field, under fire.

This is not the dawn of some distant, abstract future. It’s the present tense of war, and it’s rolling forward whether or not you’re paying attention.

So, as you read this primer, forget the clichés: no Skynet, no evil superintelligence plotting world domination (unless you count some of the procurement offices I’ve met). Instead, focus on the messy, human, and very real ways that AI is reshaping violence, security, and the business of staying alive in the twenty-first century.

Welcome to the new arms race, where the best weapon isn’t the one with the biggest warhead, but the one that learns fastest.

The New Battlefield Vocabulary

Okay, we need to define a few key terms here that you can reference throughout this piece.

Let’s be honest: half the people on LinkedIn talking about “AI in defense” are really just shuffling acronyms until someone claps. The field is crawling with jargon, much of it copied and pasted from a Raytheon whitepaper or a Silicon Valley slide deck, or, worse, generated by AI.

So, before we get further into this primer, let’s burn through the fog and lay out what these words actually mean: no hand-waving, no hype.

Artificial Intelligence (AI)

This is the big umbrella. AI is the catch-all label for machines doing things we once thought only humans could do: recognizing patterns, making decisions, learning from mistakes, even improvising. In military terms, AI is now the “brain” inside everything from drones to air defense radars to your favorite annoying chatbot.

Machine Learning (ML)

A subfield of AI, and the source of 80% of its current magic (and 90% of its PowerPoint buzz). ML means giving machines huge piles of data and letting them figure out the rules on their own. “Show me a thousand blurry photos of tanks, and I’ll start to recognize tanks on my own,” says the algorithm, before quietly upending your procurement plan.

Neural Networks (Neural Nets)

The workhorse behind the curtain. Neural nets are algorithms inspired by the human brain (but with fewer existential crises). They process data in interconnected layers, seeing, classifying, and predicting with an efficiency that would make your old statistics 101 professor weep. If you’ve seen a drone spot a camouflaged BMP from a thousand feet up, thank a neural net.
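To make “interconnected layers” concrete, here is a minimal sketch in plain Python of a forward pass through a toy two-layer network. The weights are made-up, hand-picked numbers (a real vision model learns millions of them from data and typically uses convolutional layers rather than this tiny fully connected setup), and the three inputs stand in for hypothetical image-derived features.

```python
import math


def relu(x: float) -> float:
    """Pass positive signals through, zero out negative ones."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1) so it reads like a confidence."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: every output neuron sees every input."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked weights; a trained network would learn these from data.
HIDDEN_WEIGHTS = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
HIDDEN_BIASES = [0.0, 0.1]
OUTPUT_WEIGHTS = [[1.2, -0.7]]
OUTPUT_BIASES = [-0.1]


def forward(features):
    """Run three input features through a hidden layer, then a single output neuron."""
    hidden = dense(features, HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    (score,) = dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, sigmoid)
    return score


print(round(forward([1.0, 0.0, 0.0]), 4))  # prints 0.6225
```

Each layer is just weighted sums plus a simple nonlinearity; “learning” means adjusting those weights until the output scores match labeled examples.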

Autonomy

The holy grail, or the recipe for disaster, depending on who you ask. Autonomy means a weapon system can operate on its own, with no human pilot or gunner holding its hand. Think of a drone that picks its own targets, or a ground robot that patrols, detects, and evades without a joystick operator on the other end. Levels range from “do what I say” (remote control) to “wake me when it’s over” (fully autonomous).

Edge Computing

The secret sauce of real-time AI warfare. Instead of sending all your video feeds or sensor data back to a dusty server in Nebraska (AKA the cloud), edge computing means the processing happens right there, on the drone or robot itself. If a loitering munition can ID a tank, pick a route, and dive, all before its comms get jammed, that’s edge computing doing its job.

Computer Vision

Getting machines to “see” and interpret visual data: images, video, IR feeds, and more. This is what lets a drone distinguish between a tank, a tractor, and a civilian minivan in the middle of a dust storm, all in real time. If AI is the brain, computer vision is the eyes, and it’s got 20/10 vision with night-vision goggles.

Loitering Munitions

Weapons that hover, wait, and watch for a target, then dive in for the kill. Think kamikaze drone with a GoPro and a vendetta. Ukraine, Russia, Israel, and even the Houthis are now fielding these in numbers that would make any Cold War planner nervous. They blur the line between “drone” and “missile,” and nobody’s really sure what to call them anymore, so we get the term “loitering munition.”

Swarming

When you hear “swarming” in military AI, think less about bees and more about chaos on demand. Swarming is when dozens (or hundreds) of drones, UGVs, or boats coordinate as a team, overwhelming defenses, spreading confusion, and generally making life miserable for anyone with a radar. The future of battlefield mass? Not tanks, but cheap, networked, and disposable bots acting like a plague and communicating with other members of the swarm.

Kill Chain

Military jargon for the steps between “I see something I want to destroy” and “it’s now destroyed.” Traditionally: Find, Fix, Track, Target, Engage, and Assess. AI is turning the kill chain from a bureaucratic relay race into a 100-meter sprint, shrinking each link down to a fraction of a second. In modern war, the fastest kill chain wins. Everyone else gets a medal for participation (or a nice memorial).

Narrow AI vs. LLMs (Large Language Models)

Not all AI is created equal. Narrow AI is built to do one thing really well: recognize tanks, say, or fly through woods, or jam radio signals. LLMs, like ChatGPT, Gemini, or Claude, are built for language: summarizing documents, answering questions, writing emails (or, yes, the occasional corporate apology). The AI on your drone is “narrow” and mission-focused; the AI writing your HR handbook is an LLM.

Mix up the two, and you either crash your drone or get a very apologetic missile.

There’s a reason why I define these terms. The gap between buzzword and battlefield is where most bad policy and worse journalism are born. If you can’t tell your edge computing from your edge lord, you’re not just missing the plot, you’re handing the narrative to the people who want it the least clear.

Keep these terms handy. The rest of this primer will use them like a classic guided missile uses GPS: constantly, sometimes aggressively, but always with purpose.

Categories of AI in Modern Conflict

You can’t throw a lithium battery in a warzone these days without hitting something running a neural net. But not all battlefield AI is created equal, or equally terrifying. Below are the main categories, each with its own flavor of chaos, promise, and bureaucratic flair.

Autonomous & Semi-Autonomous Systems

Drones (Air, Land, Sea), Loitering Munitions, UGVs, USVs

First up: the poster children of the AI war. You’ve seen the footage: quadcopter drones dropping grenades through open hatches, ground robots dragging wounded soldiers, sea drones menacing Russian ships in the Black Sea like angry Roombas with a grudge.

What’s changed is not only the hardware, it’s the brains inside. Autonomy now runs the spectrum from “semi” (think: pilot assists, waypoint navigation, obstacle avoidance) to “full Skynet audition” (loitering munitions that pick targets and attack with minimal, or sometimes no, human sign-off).

Air: In Ukraine, off-the-shelf FPV drones get AI upgrades that help them distinguish between tanks and decoys, even under heavy jamming or in poor light. Elsewhere, fixed-wing drones with onboard vision process sensor feeds in real time, making targeting decisions faster than most humans can say “suppressive fire.”

Land: Unmanned ground vehicles (UGVs) deliver ammo, evac casualties, or run point into minefields. Some are glorified RC cars; others, with edge AI modules, can follow visual cues, avoid obstacles, or even, if you believe the glossy marketing, return fire.

Sea: Uncrewed surface vessels (USVs) packed with narrow AI now patrol harbors, run recon, or ram adversary ships. Not quite “Hunt for Red October,” but give it six months.

Loitering Munitions: The not-so-subtle hybrid between drone and missile. They circle, scan, and strike. Onboard AI means they can wait for high-value targets, filter out false positives, and adjust for moving prey. Israel, Ukraine, Russia, and the US all use these, and if you’re on a Ukrainian artillery crew, you’re probably already sick of hearing about Russia’s “Lancet.”

Autonomy’s dirty secret: the more you add, the faster the war moves, and the less time anyone has to think about what just happened.

AI-Driven Command and Control (C2)

Data Fusion, Decision Support, Targeting, EW

Remember the image of a general hunched over a map with a cigar? Yeah, that’s gone. Modern C2 is about fusing sensor feeds, battlefield reports, drone video, and satellite imagery, then pushing all of that into algorithms that spit out targeting priorities, suggested moves, and sometimes, a new Spotify playlist for your morning commute.

Data Fusion: AI takes radar, IR, signals intelligence, drone feeds, and slams it all into a single operational picture. Ukraine’s “Delta” and “Kropyva” platforms, for example, let frontline units see (almost) everything in real time. If there’s a tank moving on the map, odds are an algorithm flagged it before a human did.

Decision Support: Not sure whether to hit that bridge or wait? Let the model run some wargames, calculate the odds, and make a suggestion. Some of these tools are smart… others, well, occasionally they “hallucinate” like a sleep-deprived intern.

Targeting: AI speeds up “find, fix, track” by spotting targets, prioritizing strikes, even deconflicting airspace. A big leap from legacy “point and shoot” doctrine.

Electronic Warfare (EW): Machine learning now analyzes radio spectrum in real time, detecting enemy jammers, spoofing GPS, or even automatically launching countermeasures before your comms are cut.
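The “single operational picture” in the Data Fusion entry above rests on an old statistical trick: when two sensors report the same quantity with different noise levels, weight each report by how much you trust it. Here is a minimal sketch of inverse-variance weighting, the textbook building block behind Kalman filters. It is not a description of how Delta, Kropyva, or any fielded system works, and the sensor readings are hypothetical.

```python
def fuse(estimates):
    """Combine independent (value, variance) estimates of the same quantity.

    Each estimate is weighted by 1 / variance, so noisier sensors count for less.
    Returns the fused value and its fused variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical readings of one position along a road, in meters:
# a radar track (variance 25 m^2) and a noisier video-derived estimate (variance 100 m^2).
position, variance = fuse([(100.0, 25.0), (110.0, 100.0)])
print(f"{position:.1f} m, variance {variance:.1f} m^2")  # 102.0 m, variance 20.0 m^2
```

The fused estimate lands closer to the more trustworthy sensor, and its variance (20) is lower than either input’s, which is the whole point of fusing.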

C2 is where AI shifts from “tool” to “partner,” sometimes helpful, sometimes running off with your battle plan and returning with something entirely new.

Counter-AI and EW

Anti-Drone Tech, Jamming, Electronic Counter-Countermeasures

Of course, for every shiny new AI-enabled killer robot, there’s an equally eager adversary trying to make it crash, jam, or wander off and hit a tractor instead. Welcome to the new “arms race within the arms race.”

Anti-Drone: Shotguns and nets are out; AI-powered jammers, microwave guns, and rapid-targeting air defense systems are in. Systems like Germany’s Skynex or the US “Coyote” C-UAS use AI to spot, track, and down enemy drones, sometimes in swarms.

Jamming: AI algorithms sweep the spectrum, identify hostile signals, and deploy the right jamming technique, all automatically, all in real time. Russian and Ukrainian EW units duel daily, with AI helping adapt to changing enemy tactics faster than any human operator could.

Counter-Countermeasures: When both sides have AI, the game gets weird: machine learning adapts to enemy jamming, finds new frequencies, and reroutes comms or navigation on the fly.

It’s chess at machine speed, and nobody knows who’s winning until the pieces are all on the floor. Ten years ago, when I first started writing as a profession, I envisioned a day when AI would go out and fight AI, and all the humans just stayed home.

Are we there yet? No. But maybe in five years.

Information Warfare

AI-Powered Influence, Deepfakes, Botnets

Finally, not all AI battles are fought with explosives. The “gray zone” of modern war is filled with algorithms churning out influence ops, deepfakes, and armies of bots designed to sway opinion, destabilize governments, or just plain sow confusion.

Influence Operations: AI writes fake news, crafts viral memes, and even holds conversations on social media, posing as real people. During the early days of the Ukraine war, both sides used bots and sock puppets to push narratives, sow panic, and muddle the truth. Personally, I think Ukraine’s info ops are far superior to Russia’s.

Deepfakes: What used to take a Hollywood studio now takes an afternoon and a decent graphics card. Fake videos of leaders surrendering, doctored combat footage, or synthetic “proof” of atrocities circulate at warp speed.

Botnets: AI-coordinated swarms of social media accounts flood platforms, astroturfing opinion or spamming pro- or anti-war messages. It’s less James Bond, more Black Mirror, but the effect is the same: public confusion, paralysis, or, in the worst cases, incitement to violence.

The line between “combat” and “content” has never been blurrier, and the people at risk are often nowhere near the front.

AI in war is a sprawling ecosystem, from the missile streaking overhead to the troll farm spamming your DMs. Some of it is overhyped, much of it is underreported, but all of it is moving faster than the human eye, or mind, can comfortably follow.

Next time you see a headline about “AI revolutionizing warfare,” check which category they mean. Odds are, it’s more than one.

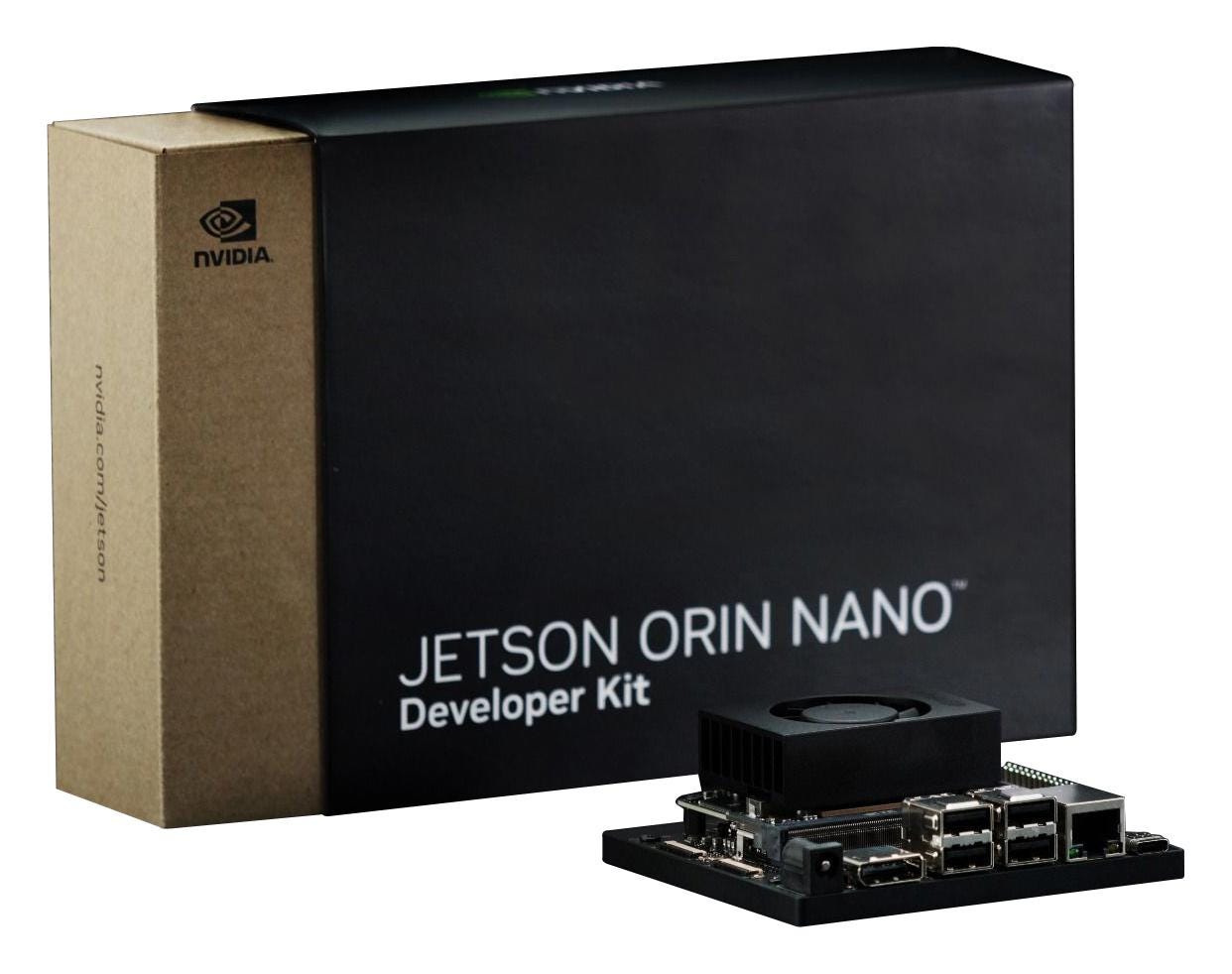

Case Study: The Nvidia Jetson Orin, The Brain Inside the Drone

Imagine you’re standing in Ukraine. The sun’s barely up. The world is quiet, until an eleven-foot-long, Iranian-designed Shahed drone buzzes overhead, then banks sharply, ignoring GPS jamming and friendly decoy flares, and plunges toward your ammo dump with all the subtlety of a bowling ball hurled through a stained-glass window.

It just picked its own target, in real time, because inside its carbon-fiber skull sits a little green board: Nvidia’s Jetson Orin.

Jetson Orin isn’t a supercomputer in the sci-fi sense, but on the battlefield, it’s the closest thing you’ll find to a plug-and-play “war brain.”

Originally intended for things like self-driving cars and smart security cameras (you know, peaceful stuff), the Orin packs a multi-core ARM CPU, a muscular GPU (think: hundreds to thousands of little math wizards), dedicated deep-learning accelerators on some models, and, on the top-end versions, enough RAM to embarrass most Best Buy laptops. What matters most? All this horsepower fits in a module smaller than your phone and draws roughly 7 to 60 watts depending on the model and power mode, perfect for drones, robots, loitering munitions, or, apparently, whichever project the Russian MOD scraped off AliExpress last week.

What Makes Orin Special?

Jetson Orin excels at one thing: running AI models, fast, right at the edge, with no internet required. Need a drone to spot tanks through haze, dodge electronic jammers, or identify an ammo truck in a moving convoy? Orin chews through video frames in milliseconds, running neural nets trained on a diet of war footage, civilian imagery, and whatever else the engineers could scrape from YouTube. It’s real-time perception, targeting, navigation, and sometimes, a dash of tactical improvisation.

Where older drones had to phone home for orders (“Is that a tank or a bus?”), an Orin-powered drone makes that call itself, and if you’re underneath, you’ll feel the result before anyone at HQ can weigh in.

Until recently, the Shahed-136 was basically a flying lawnmower with a bomb strapped to it. Its targeting was so primitive that most Ukrainian soldiers could outsmart it with a bit of camouflage and a little luck. But now, Russia has fielded the “MS001” upgrade.

According to Ukrainian military intelligence and open-source analysts, Russia’s new Shahed variant now carries an Nvidia Jetson Orin module.

This isn’t speculation: it’s been spotted in wreckage and confirmed in at least one intercepted Russian procurement document. The upgrade lets the drone process sensor feeds (video, thermal, radar) on the fly, spot vehicles or structures using onboard computer vision, and prioritize targets based on what it “sees.”

If GPS is jammed or spoofed, Orin’s neural nets keep the drone on course using visual terrain mapping, matching the landscape below with preloaded imagery, like a cruise missile that learned to do its own homework.

The scariest part? This AI doesn’t just guide the drone; it chooses targets. If there are multiple vehicles below, the Shahed can decide which is most valuable, all without calling home for permission.

In the old days, targeting logic was a glorified game of “hot or cold.” Now it’s more like “Which truck gets the honor today?” decided in the air, in the blink of an eye.

Ukraine’s (and Everyone’s) New Normal

Ukraine isn’t sitting still, either. While Russia is busy hot-rodding Iranian drones, Ukrainian engineers are strapping Jetson Orins to everything from cheap FPV quadcopters to prototype unmanned ground vehicles.

The goal? Cheap, modular autonomy: if you can buy the board, you can build the “brain.” Some Ukrainian FPVs now run object-detection models that flag targets, correct for wind or GPS loss, and even help rookie pilots land grenade drops with pinpoint accuracy, no call to Silicon Valley required.

The same Jetson modules are showing up in Israeli loitering munitions, American swarming drones, Turkish attack UAVs, and even a few prototypes rolling off 3D printers in startup basements from Ankara to Austin. Why? Because the Orin strikes the perfect balance: enough AI power to matter, small and cheap enough to deploy at scale, and open enough to program with whatever neural net you like. It’s the AK-47 of battlefield brains: accessible, rugged, and endlessly hackable.

The Real World Impact

Here’s what all the glossy brochures and breathless tweets don’t tell you: The Orin isn’t “smart” in the way science fiction promised. It doesn’t ponder the ethics of war, write poetry, or plot world domination.

What it does is turbocharge the pace of violence, giving every drone, missile, or ground robot the ability to “think” fast, adapt on the fly, and decide, sometimes lethally, in the time it takes a human to blink.

It also levels the playing field. Ten years ago, autonomy was a Pentagon luxury. Today, anyone with a credit card, a soldering iron, and an internet connection can build a drone that, at least for a few crucial seconds, outsmarts a million-dollar tank or air defense system.

The phrase “democratization of violence” gets thrown around a lot, but Orin makes it literal. You can actually buy one on Amazon right now.

A Brief Warning About Sanctions, Ethics, and the Next War

Of course, none of this is happening in a vacuum. Nvidia would rather you use the Orin to automate your hydroponic tomatoes than blow up a fuel depot. Western sanctions officially prohibit shipping AI chips to Russia, Iran, or other “problem children.”

But the world is awash in gray-market tech, and the learning curve for weaponizing commercial AI modules is embarrassingly short. Every time a Russian Shahed smashes into a Ukrainian ammo dump, you can bet someone in a Palo Alto boardroom winces, and someone in Moscow updates their shopping list.

What does this all add up to? The Orin isn’t the only “AI brain” in the global arms race, but it’s the most accessible, the most widely hacked, and the most directly responsible for the new tempo of modern war. Whether you’re a defense minister or a kid building drones in your garage, the Orin is the neural heart pumping blood through a new generation of autonomous weapons.

How Battlefield AI Gets Built

If you’re picturing AI as some black box that’s delivered from a secret lab, stamped “Approved for Mayhem,” and plugged straight into a drone, let’s pull back the curtain. Battlefield AI isn’t magic; it’s sweat, hustle, and a surprising amount of grunt work with spreadsheets, soldering irons, and, yes, a lot of human judgment before a machine ever “decides” anything.

Model Training: Teaching the Machine to See War

The first step in making a drone smart isn’t code, it’s data. Not “Silicon Valley demo day” data. We’re talking dirty, blurry, sometimes blood-spattered real-world images and sensor feeds straight from the front. Here’s how the sausage gets made:

Where the Data Comes From

Drone footage: The good, the bad, and the pixelated. Ukraine is sitting on mountains of first-person video from FPVs, quadcopters, and even old GoPros taped to a broomstick. Russia and everyone else are hoarding their own, sometimes swiped from Telegram or YouTube.

Thermal, IR, and radar: Nighttime firefights, fog, and all-weather targeting mean training data can’t just be daylight, high-def, and picturesque.

Open source: Satellite imagery, TikTok, social media, Google Street View (don’t laugh, it’s been used).

Captured enemy footage: “If you can’t beat ‘em, label their videos.” Nothing says “joint learning environment” like repurposing the opposition’s drone kills for your own neural net.

Annotation: No One Escapes the Labeling Gulag

Humans draw boxes: Literally. Dozens (sometimes hundreds) of digital laborers click, drag, and outline every tank, truck, trench, or sheep that wanders into the frame. Sometimes what wanders in is a soldier. Sometimes it’s a tractor (the real Ukrainian MVP).

Supervised learning: The AI learns by example: “This blob is a T-72, that’s a burnt-out BMP, ignore the cow.”

Validation: Keep some data back for testing. If your model starts thinking every hay bale is a tank, it’s back to the drawing board.
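The supervised-learning and validation steps above can be sketched in a few lines of plain Python. To keep it readable, this swaps the neural net for a much simpler technique, a nearest-centroid classifier, and the two features (length and height in meters) and all the labeled examples are hypothetical. The shape of the workflow is the point: label examples, hold some back, train on the rest, then score the model on data it has never seen.

```python
import random

# Hypothetical labeled examples: ((length_m, height_m), label).
LABELED = [
    ((2.3, 1.4), "cow"), ((2.5, 1.5), "cow"), ((2.4, 1.3), "cow"), ((2.2, 1.4), "cow"),
    ((4.0, 2.8), "tractor"), ((4.3, 2.9), "tractor"), ((3.8, 2.7), "tractor"), ((4.1, 3.0), "tractor"),
]


def train(examples):
    """'Training' here just averages the feature vectors for each label (one centroid per class)."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(model, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    return min(
        model,
        key=lambda label: sum((c - f) ** 2 for c, f in zip(model[label], features)),
    )


def train_and_validate(data, holdout=0.25, seed=0):
    """Shuffle, hold back a fraction for validation, train on the rest, report accuracy."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - holdout))
    train_set, val_set = shuffled[:cut], shuffled[cut:]
    if not train_set or not val_set:
        raise ValueError("holdout leaves an empty training or validation set")
    model = train(train_set)
    correct = sum(predict(model, features) == label for features, label in val_set)
    return model, correct / len(val_set)


model, accuracy = train_and_validate(LABELED)
print(f"validation accuracy: {accuracy:.0%}")  # prints 100% on this cleanly separated toy data
```

Real battlefield data is never this tidy; the held-back validation set is exactly where the “every hay bale is a tank” failures would show up.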

Your model is only as good as your data. If your drone was only trained on sunny days, good luck when the mud flies and the snow falls. Real battlefield footage is messy, so your AI has to learn to thrive on chaos.

Model Optimization: Getting Genius into a Shoebox

Now comes the hard part: getting your newly trained “tank spotter” to run on a drone with the computing power of a smart toaster.

Miniaturization (Quantization, TensorRT, etc.)

From big brains to small chips: You train on giant Nvidia servers (A100s, H100s, racks of humming hardware that cost more than a suburban home). But your drone? It’s working with a Jetson Orin, a Raspberry Pi, or, if you’re really living dangerously, an off-brand Android board from AliExpress.

Quantization: This is AI lingo for “shrink it ‘til it fits.” You take all those fancy 32-bit neural net weights and crunch them down to 16-bit or 8-bit, losing a smidge of precision but gaining a ton of speed and saving battery life.

TensorRT and friends: Nvidia’s magic toolkit, formally a proprietary, high-performance deep learning inference optimizer and runtime. Translation: It takes a trained AI model, fuses layers, strips out inefficiency, and compiles it into a streamlined, GPU-ready “engine” that runs much faster and smaller, with minimal loss of accuracy, on Nvidia GPUs (including Jetson Orin). No cloud, no internet, no excuses.
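The quantization step described above is easy to see in miniature. Below is a minimal sketch of one common scheme, symmetric per-tensor int8 quantization, in plain Python: find the largest absolute weight, map it to 127, and round everything else onto that integer grid. The weights are made-up numbers, and real toolchains (TensorRT included) add refinements such as calibrating scales on sample data, so treat this as the idea, not the production recipe.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # one float scale for the whole tensor
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.42, -1.27, 0.003, 0.9]  # hypothetical float32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [42, -127, 0, 90]
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2)  # True
```

Each int8 value takes one byte instead of float32’s four, which is where the memory and bandwidth savings come from; the cost is a rounding error of at most half a step (scale / 2) per weight, the “smidge of precision” mentioned above.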

Why Edge AI is the Only Game in Town

Comms are for suckers: If your drone has to send video back to HQ for analysis, you’ve already lost. Russian jamming, Ukrainian EW, Iranian sandstorms: pick your poison, but nobody’s comms are safe.

Milliseconds matter: The window between “spot target” and “get shot down” is closing fast. AI has to live on the drone, decide on the drone, and act on the drone. Anything else is a nostalgia act.

Survivability and scale: Edge AI means you can deploy thousands of autonomous or semi-autonomous systems, each running independently, adapting in the field, and learning as they go.

Upgrades on the fly: New model? Patch and go. War is the ultimate software update environment.

What sets modern battlefield AI apart is the brutal, relentless process of gathering real war data, teaching machines to survive in the chaos, and then grinding those brains down to fit inside hardware you can tape to a homemade drone. The result isn’t always pretty: mistakes are made, friendlies get spooked, and hay bales get a lot of extra attention. But the speed at which this cycle repeats (train, optimize, deploy, repeat) is what’s fueling the AI arms race.

In the end, whoever can teach, shrink, and field their AI the fastest doesn’t just win the tech; they start controlling the tempo of the battle itself.

LLMs vs. Narrow AI: Know the Difference

Let’s clear the air before your boss, your favorite pundit, or your least favorite defense consultant tells you, “AI is AI, it’s all the same.” Spoiler: it’s not.

The gap between ChatGPT and the kind of AI you’ll find in a drone over Ukraine is philosophical, practical, and, if you get it wrong, occasionally explosive.

Why ChatGPT Won’t Be Flying a Drone Any Time Soon

You’ve probably seen the headlines: “AI Writes Novels, Paints Pictures, Passes the Bar Exam, And Now Runs the War.”

Let’s hit the brakes. The Large Language Models (LLMs) behind viral chatbots like ChatGPT, Gemini, and Claude are linguistic powerhouses. Feed them news stories, legal questions, or a Taylor Swift lyric, and they’ll give you paragraphs for days.

But put an LLM in charge of a drone strike and you’re in for a slapstick episode:

LLMs are built to generate and interpret language; they aren’t built to crunch real-time video feeds, analyze IR spectra, or steer a loitering munition through jamming. They’re trained on terabytes of text from the internet, not terabytes of battlefield footage. And they need beefy data centers and LOTS of RAM, hardly ideal for something duct-taped to a $300 quadcopter.
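How much RAM is “LOTS”? A back-of-the-envelope sketch, assuming a hypothetical 70-billion-parameter model and counting only the memory needed to hold its weights (activations, context caches, and everything else come on top):

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Memory needed just to store a model's weights, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gb(70e9, bits):.0f} GB")
# 32-bit: 280 GB
# 16-bit: 140 GB
#  8-bit: 70 GB
#  4-bit: 35 GB
```

Even squeezed to 4 bits, the weights alone would eat more than half of the 64 GB of shared memory on Nvidia's top-end Jetson AGX Orin module, and that's before the model does any actual work.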

Could you, in theory, have a drone “talk” to an LLM for mission updates or after-action reports? Maybe.

Should you trust it to ID a T-90 in a snowstorm at 90 km/h, avoid decoys, and decide who lives or dies?

Only if you want your after-action report to include a “tragic misunderstanding involving a herd of goats.”

What “Narrow AI” Means for Weapon Systems

Weaponized AI is “narrow,” meaning it’s built for a specific, high-stakes job.

One model spots vehicles in thermal imagery. Another navigates through jamming by matching terrain features to a map. Yet another allocates targets among a drone swarm, or optimizes firing angles for a loitering munition.

Narrow AI doesn’t compose poetry or answer trivia; it does one thing, does it fast, and, ideally, does it right. Here’s why that matters:

Speed: Narrow AI is ruthlessly optimized for millisecond response. No waiting for the cloud, no spinning up extra RAM.

Footprint: It can be miniaturized and deployed on tiny, rugged, low-power hardware: think Jetson Orin, not an Amazon server farm.

Reliability: It’s trained on exactly the kind of data it will see in the field (night vision, snow, explosions, bad comms, and all).

Security: Doesn’t need to “phone home” for every decision, so it survives jamming, spoofing, and intermittent communications.

In other words, the “AI” that’s winning wars right now is not the same AI that won Jeopardy! or got tricked into confessing it was a toaster. It’s the silent, job-focused, sometimes brutal specialist that’s been trained, tested, and battle-hardened for exactly one purpose. No distractions, no digressions, no existential dread.

Here’s why this matters for anyone covering or thinking about modern war:

Confusing the two leads to bad policy, bad journalism, and, occasionally, bad targeting.

Narrow AI will keep getting smaller, faster, and more lethal… and will proliferate everywhere, because it’s relatively cheap and modular.

LLMs will reshape analysis, planning, and maybe even the news you read about the war, but they’ll never replace the on-the-fly, millisecond judgment that real combat autonomy requires.

So the next time you hear, “AI is running the war,” ask: Which AI?

If it’s not seeing, sensing, and deciding at the edge, it’s just another chatbot, no matter how many think pieces it writes.

Old Tech, New Brains: Legacy Systems vs. Modern AI

It’s tempting to look at today’s AI-powered drones and think, “We’ve always had smart weapons, right?”

Well, sort of, if your definition of “smart” is a 1990s GPS chip and a map stuffed into a Tomahawk cruise missile. But comparing yesterday’s legacy systems to modern battlefield AI is like comparing a landline to a smartphone.

Both make calls; only one ruins your sleep schedule.

A good way to see the difference is to compare the Tomahawk cruise missile, the hero of the first Gulf War, with modern AI weapons.

Tomahawk vs. AI Drone: Kill Chain Speed and Adaptability

Tomahawk (The Legacy Marvel):

The Tomahawk is the Model T of precision strike: pre-programmed route, terrain-contour matching, “seek and destroy” guidance, and an onboard computer about as flexible as your grandpa’s remote control.

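Target coordinates are baked in before launch; if the plan changes mid-flight, well, hope you packed a second missile. (Later Block IV Tomahawks can be retargeted in flight, but only when a human sends new orders over a satellite link.)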

The kill chain (Find, Fix, Track, Target, Engage, Assess) in the Tomahawk era went like this:

Human finds target

Human plans mission

Missile is launched

Missile follows its orders, no backchat, no rerouting, no improvisation

Result: impressive, but slow to react and inflexible when things change.

AI Drone (The Modern Upstart):

Today’s AI-enabled drones (think Shahed MS001, Ukraine’s FPVs, or some newer loitering munitions) are built for on-the-fly adaptation.

The kill chain shrinks:

Drone is launched

Onboard AI searches, identifies, and selects the best target in real time

Navigation, targeting, and even strike decisions can be made or updated mid-mission

All this happens at “machine speed”: milliseconds, not minutes

If the tank moves, if GPS gets jammed, or if the weather changes, a well-trained AI drone can compensate, reroute, and strike anyway. It doesn’t panic, doesn’t wait for instructions, and (most of the time) doesn’t confuse a hay bale for a T-90.

Speed and Adaptability:

The old process was linear, bureaucratic, and plodding. The new process is fast, recursive, and ruthless. The Tomahawk’s kill chain was “fire and forget.” Today’s AI kill chain is “fire and keep learning.”

If a Tomahawk’s orders are outdated by the time it reaches the target, you’re out a couple million bucks, and your bridge is still standing. If an AI drone’s orders are outdated, it recalibrates and tries again before you even notice it’s overhead.

What’s Changed in OODA Time?

You’ve probably heard about the OODA loop: Observe, Orient, Decide, Act. It’s the classic model for rapid decision-making, developed by fighter pilot and Air Force strategist John Boyd and drilled into military doctrine ever since.

Legacy Systems:

OODA loops were slow and required lots of human eyes and hands.

Each step took time (minutes, hours, sometimes days) because every observation and decision went up and down the command chain.

Modern AI Systems:

Now, the drone itself observes, orients, decides, and acts in a fraction of a second.

Humans are often only “on the loop” for oversight, not in the loop for every decision.

That means fewer delays, less friction, and, uncomfortably, fewer chances to stop a mistake before it happens.

The speed advantage is a survival edge.

On a battlefield where the side that acts fastest usually wins, AI-driven weapons compress the OODA loop until the enemy is always one step behind. In Ukraine, Russian convoys sometimes don’t make it to the next village because a $500 drone identified, prioritized, and attacked them faster than headquarters could issue a warning. Israeli loitering munitions have shifted from “one missile, one target” to “one swarm, one battlefield,” hitting what needs hitting as conditions evolve.

Old tech was “smart” for its time, but it played by the rules of slow, careful, hierarchical war. Modern AI is rewriting SOP on the fly, at the speed of silicon, and daring everyone else to catch up.

Who’s Building the Future of AI Weapons?

There was a time when the phrase “military technology” conjured images of top-secret bunkers, men in white lab coats, and budgets large enough to make a Fortune 500 CEO weep. Today, the field is crowded with state giants, private disruptors, and, just as often, twenty-somethings in a garage, assembling weapons that look like Kickstarter projects with a body count.

Here’s who’s actually shaping the AI arms race.

The Big Players (a.k.a. The Kids Who Never Left the Science Fair)

United States

Let’s get this out of the way: The US remains the granddaddy of defense tech, with an ecosystem so bloated it can bankroll both innovation and MASSIVE bureaucracy at once.

Here are the big US players:

Lockheed Martin, Northrop Grumman, Raytheon, Boeing, Palantir, Anduril, Shield AI, AeroVironment, Skydio, General Atomics, and a tech-industry cast that includes Google and Microsoft (sometimes after congressional hearings).

Here’s what they’re building:

Loyal Wingman drones, swarming munitions, C-UAS, collaborative autonomy, and the Replicator Initiative. If it moves, sees, or shoots, there’s a think tank in D.C. claiming they invented it.

China

China’s playbook: Out-spend, out-scale, and, when possible, out-copy.

Who: AVIC, CASIC, Norinco, Chinese Academy of Sciences, a spiderweb of startups, and People’s Liberation Army labs that don’t like journalists much.

What: Stealth drones (CH-7), AI swarm demos, hypersonic missiles, “intelligentized warfare” as a doctrine. Don’t ask for technical details, but assume the best, and the worst, are being exported.

Russia

If there’s a shortcut to fielding AI, Russia will find it and then post the blueprints to Telegram.

Who: Kalashnikov, Almaz-Antey, Kronstadt, the Russian MOD’s own labs, plus a steady diet of “imported” tech.

What: Lancet and KUB loitering munitions, Orion drones, Shahed “MS001” with Jetson Orin (despite sanctions), EW systems built with equal parts cunning and duct tape. The difference: Russia tests in the field, learns (painfully), and iterates faster now than it did in 2022.

Ukraine

No nation has “move fast and break things” tattooed on its military-industrial complex like Ukraine.

Who: Defense startups (hundreds of them), Army innovation units, the Brave1 cluster, Diia.City hackers, and a legion of tinkerers trading notes on Signal.

What: AI-powered FPV drones, homegrown battlefield apps (Delta, Kropyva), field-modified UGVs, jammers, DIY counter-drone kits. If you can code it, print it, or bolt it to an e-bike, it’s in play.

Israel

Quietly doing more with less and also exporting everywhere.

Who: Rafael, Elbit Systems, Israel Aerospace Industries, plus a startup scene you wouldn’t believe unless you’ve been.

What: Harpy/Harop loitering munitions, SkyStriker, Carmel AFV project, Iron Dome upgrades, AI for C4ISR and EW. Most of the world’s “smart” drones have Israeli fingerprints somewhere in the code.

Turkey

The disruptor, now firmly established.

Who: Baykar, Aselsan, Roketsan, STM, and a growing army of smaller shops.

What: Bayraktar TB2 and Akıncı drones, Kargu-2 kamikaze UAVs with facial recognition, mass-produced swarming bots, C-UAS tech.

M.O.: Build cheap, ship fast, export everywhere conflict is brewing.

The Dark Horses & Wildcards

South Korea

Brutally efficient: robot border guards, autonomous naval and air drones, and real-world deployments that would give most NATO lawyers a heart attack.

France, UK, Germany

Joint Euro-projects (FCAS, Eurodrone, GMARS), swarming experiments, AI-enabled targeting, all with just enough regulation to slow things down to a brisk crawl.

Iran

The global leader in “it’s not much, but it’s honest work.” Shahed drones with AI brains, low-cost loitering munitions for proxy wars and export, and a knack for getting around sanctions with third-party chips.

India, Australia, Singapore, Saudi Arabia, UAE

All rushing to join the party, funding drone swarms, C-UAS, and homegrown autonomy programs. Some will punch above their weight, especially as tech gets cheaper and more modular.

Commercial vs. State Innovation: The Garage Strikes Back

For every headline about a defense mega-contract, there’s a counter-narrative:

The garage innovator: Ukrainian teenagers with a 3D printer and a credit card, hacking together FPV drones that outfox Russian EW gear.

The open-source collective: Volunteers and hobbyists, sometimes in different time zones, developing jamming-resistant software or vision models faster than defense contractors can schedule a meeting.

The mercenary tech firm: Palantir and Anduril offering “AI-as-a-Service” to whoever can pay, sometimes leapfrogging entire procurement cycles.

Even US Special Operations Command has learned to shop at Best Buy (or AliExpress) when the bureaucratic machine can’t deliver on time.

The AI weapons revolution isn’t top-down or bottom-up; it’s both.

The world’s biggest defense budgets are racing against the world’s best hackers. The winner? Usually, the one who can update the firmware fastest, iterate in the field, and isn’t afraid to break the rules (or a few export laws) along the way.

Okay, let’s start to wrap up this massive article.

You might think, “Surely, someone’s in charge here, right?”

Not quite.

Western governments try to keep AI chips, software, and advanced algorithms out of hostile hands. Sanctions are in place; end-user certificates are signed. But in the real world, Jetson Orins show up in Russian drones, Chinese vision chips power Iranian weapons, and most battlefield code can be found on GitHub if you know where to look.

The very tools that drive civilian AI progress (TensorFlow, PyTorch, YOLO) are fueling the battlefield faster than export controls, legal threats, or ethical appeals can keep up. Ukraine and Russia both modify, share, and sometimes outright steal the same public code.

Anyone with a laptop, a few hundred bucks, and an attitude can build battlefield AI. Farewell to war as a strictly state-on-state affair: non-state actors, proxies, and even hobbyists are in play.

So what’s the danger?

Loss of control: When AI weapons proliferate, “owning” escalation, accountability, or even basic war planning gets harder.

Ethical whiplash: What happens when a $200 drone built in a garage makes a kill decision, no rules, no human sign-off, no Geneva Convention?

Pace outruns law: The world’s legal and ethical frameworks for war, already patchwork, aren’t designed for weapons that can update themselves and spread faster than the next treaty can be drafted.

AI’s biggest “threat” isn’t that it will go rogue and nuke the planet. It’s that it will make war too fast, too flexible, and too widely available for old-fashioned deterrence or even simple accountability to keep up.

This arms race doesn’t end with the biggest bomb; it ends when every player (state, startup, or lone hacker) can build, field, and iterate AI faster than their adversaries can defend or regulate.

I mean, how do you even hold an AI accountable for an alleged war crime? Diplomats have been debating autonomous weapons at the UN for years, and nobody has a good answer yet…

The Fastest Kill Chain Wins, But At What Cost?

If you’ve made it this far, congratulations, you now know more about AI in modern warfare than most government officials and half the “defense consultants” on cable news.

We’ve built a world where the pace of violence is measured not in months or days, but in milliseconds. The kill chain isn’t a chain anymore. It’s a blur, a circuit, a feedback loop, an algorithmic reflex where the side with the quickest “see-decide-strike” cycle stacks the bodies, holds the ground, and writes the after-action reports.

AI’s trajectory in war isn’t a straight line; it’s an arms spiral. Every advance in autonomy, every patch or update, every clever workaround doesn’t just solve yesterday’s problems; it spawns tomorrow’s headaches. Sure, militaries have always chased speed. But never before have machines learned, adapted, and escalated faster than their human masters could draft the standard operating procedure. Today, the battlefield is a live laboratory, and the experiments rarely wait for ethical review.

So, speed is king. But the new kingdom is messy.

Autonomy promises efficiency and lethality: drones that strike without flinching, munitions that outthink their targets, command systems that process more data than a hundred staff officers ever could.

But speed and autonomy have a dark side. Decision loops so tight there’s no room for regret, legal review, or second thoughts. Mistakes move as fast as intentions.

Escalation can be a matter of software, not statecraft.

Here’s the part they don’t put on the glossy brochures or defense trade show banners: the new arms control problem is about controlling the velocity of war itself.

In an age when algorithms decide who gets hit and who gets spared, accountability gets slippery.

So, where do we go from here?

Militaries will keep iterating, because they must. As long as one side can shrink the kill chain and push more autonomy to the edge, the other side has no choice but to follow. The only real ceiling is how fast humans can adapt their doctrine, law, and ethics to keep pace with their own machines.

Policymakers and the public need to get much, much smarter. Not just about the headlines (“AI is here!”) but about the realities on the ground: how these systems work, how they fail, and how quickly today’s breakthrough becomes tomorrow’s global risk.

Transparency and accountability must catch up, or at least try. If we can’t stop the arms race, maybe we can at least name it, track it, and demand some minimal rules of the road. Maybe.

And for everyone else (civilians, techies, journalists, garage tinkerers), the lesson is simple: The boundary between peace and war, between innovation and escalation, has never been thinner or more crowded. The future will belong to those who can see the dangers coming before the machines do.

AI in war is the ultimate double-edged sword. It speeds up everything, including our best intentions and our worst mistakes.

The challenge goes far beyond “keeping up.” We need to decide, before the machines do it for us, what’s actually worth doing at the speed of war.

Слава Україні! (Glory to Ukraine!)
